ReActor Node for ComfyUI (Face Swap)

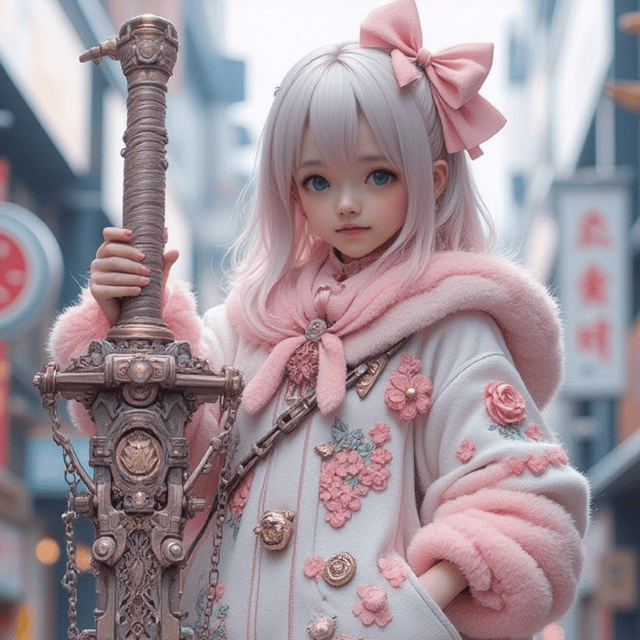

Download: https://github.com/lingkops4/lingko-FaceReActor-Node

Workflow: https://github.com/lingkops4/lingko-FaceReActor-Node/blob/main/face_reactor_workflows.json

The Fast and Simple Face Swap Extension Node for ComfyUI, based on the ReActor SD-WebUI Face Swap Extension.

This Node comes without an NSFW filter (uncensored; use it at your own responsibility).

✨What's new in the latest update✨

0.5.1 ALPHA1

- Support for GPEN 1024/2048 restoration models (available in the HF dataset https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/facerestore_models).
- ReActorFaceBoost Node: an attempt to improve the quality of swapped faces. The idea is to restore and scale the swapped face (according to the face_size parameter of the restoration model) BEFORE pasting it into the target image (via the inswapper algorithms). More information is in PR#321.

Installation

- SD WebUI: AUTOMATIC1111 or SD.Next
- Standalone (Portable) ComfyUI for Windows

Usage

You can find ReActor Nodes inside the ReActor menu or by using search (just type "ReActor" in the search field).

List of Nodes:

••• Main Nodes •••
- ReActorFaceSwap (Main Node)
- ReActorFaceSwapOpt (Main Node with the additional Options input)
- ReActorOptions (Options for ReActorFaceSwapOpt)
- ReActorFaceBoost (Face Booster Node)
- ReActorMaskHelper (Masking Helper)

••• Operations with Face Models •••
- ReActorSaveFaceModel (Save Face Model)
- ReActorLoadFaceModel (Load Face Model)
- ReActorBuildFaceModel (Build Blended Face Model)
- ReActorMakeFaceModelBatch (Make Face Model Batch)

••• Additional Nodes •••
- ReActorRestoreFace (Face Restoration)
- ReActorImageDublicator (Duplicate one Image to Images List)
- ImageRGBA2RGB (Convert RGBA to RGB)

Connect all required slots and run the query.

Main Node Inputs

- input_image: the image to be processed (the target image, analogous to "target image" in the SD WebUI extension).
  Supported Nodes: "Load Image", "Load Video" or any other nodes providing images as an output.
- source_image: an image with a face or faces to swap into the input_image (the source image, analogous to "source image" in the SD WebUI extension).
  Supported Nodes: "Load Image" or any other nodes providing images as an output.
- face_model: the input for the "Load Face Model" Node or another ReActor node, providing a face model file (face embedding) that you created earlier via the "Save Face Model" Node.
  Supported Nodes: "Load Face Model", "Build Blended Face Model".

Main Node Outputs

- IMAGE: an output with the resulting image.
  Supported Nodes: any nodes that take images as an input.
- FACE_MODEL: an output providing the source face's model built during the swapping process.
  Supported Nodes: "Save Face Model", "ReActor", "Make Face Model Batch".

Face Restoration

Since version 0.3.0, ReActor Node has built-in face restoration. Just download the models you want (see the Installation instructions) and select one of them to restore the resulting face(s) during the face swap.
It will enhance face details and make your result more accurate.

Face Indexes

By default, ReActor detects faces in images from "large" to "small". You can change this order by adding the ReActorFaceSwapOpt node with ReActorOptions.

If you need to target specific faces, you can set indexes for the source and input images. The index of the first detected face is 0, and you can set indexes in any order you need.
E.g.: 0,1,2 (for Source); 1,0,2 (for Input).
This means: the second Input face (index = 1) will be replaced by the first Source face (index = 0), and so on.

Genders

You can specify the gender to detect in images. ReActor will swap a face only if it meets the given condition.

Face Models

Since version 0.4.0, you can save face models as "safetensors" files (stored in ComfyUI\models\reactor\faces) and load them into ReActor, implementing different scenarios while keeping super lightweight models of the faces you use.

To make new models appear in the list of the "Load Face Model" Node, just refresh the page of your ComfyUI web application. (I recommend using ComfyUI Manager; otherwise your workflow can be lost after you refresh the page if you haven't saved it beforehand.)

Troubleshooting

I.
(For Windows users) If you still cannot build Insightface for some reason, or just don't want to install Visual Studio or VS C++ Build Tools, do the following:

1. (ComfyUI Portable) From the root folder, check the version of Python: run CMD and type `python_embeded\python.exe -V`
2. Download the prebuilt Insightface package for Python 3.10, for Python 3.11 (if you saw 3.11 in the previous step) or for Python 3.12 (if you saw 3.12), and put it into the stable-diffusion-webui (A1111 or SD.Next) root folder (where the "webui-user.bat" file is) or into the ComfyUI root folder if you use ComfyUI Portable.
3. From the root folder:
   - (SD WebUI) run CMD and `.\venv\Scripts\activate`
   - (ComfyUI Portable) run CMD
4. Then update PIP:
   - (SD WebUI) `python -m pip install -U pip`
   - (ComfyUI Portable) `python_embeded\python.exe -m pip install -U pip`
5. Then install Insightface:
   - (SD WebUI) `pip install insightface-0.7.3-cp310-cp310-win_amd64.whl` (for 3.10), `pip install insightface-0.7.3-cp311-cp311-win_amd64.whl` (for 3.11) or `pip install insightface-0.7.3-cp312-cp312-win_amd64.whl` (for 3.12)
   - (ComfyUI Portable) `python_embeded\python.exe -m pip install insightface-0.7.3-cp310-cp310-win_amd64.whl` (for 3.10), `python_embeded\python.exe -m pip install insightface-0.7.3-cp311-cp311-win_amd64.whl` (for 3.11) or `python_embeded\python.exe -m pip install insightface-0.7.3-cp312-cp312-win_amd64.whl` (for 3.12)
6. Enjoy!

II. "AttributeError: 'NoneType' object has no attribute 'get'"

This error may occur if something is wrong with the model file inswapper_128.onnx.
Try to download it manually from here and put it into ComfyUI\models\insightface, replacing the existing one.

III. "reactor.execute() got an unexpected keyword argument 'reference_image'"

This means that the input points have been changed with the latest update.
Remove the current ReActor Node from your workflow and add it again.

IV.
ControlNet Aux Node IMPORT failed error when used with ReActor Node

1. Close ComfyUI if it is running.
2. Go to the ComfyUI root folder, open CMD there and run:
   - `python_embeded\python.exe -m pip uninstall -y opencv-python opencv-contrib-python opencv-python-headless`
   - `python_embeded\python.exe -m pip install opencv-python==4.7.0.72`
3. That's it!

V. "ModuleNotFoundError: No module named 'basicsr'" or "subprocess-exited-with-error" during future-0.18.3 installation

1. Download https://github.com/Gourieff/Assets/raw/main/comfyui-reactor-node/future-0.18.3-py3-none-any.whl
2. Put it into the ComfyUI root folder and run: `python_embeded\python.exe -m pip install future-0.18.3-py3-none-any.whl`
3. Then run: `python_embeded\python.exe -m pip install basicsr`

VI. "fatal: fetch-pack: invalid index-pack output" when you try to git clone the repository

Try to clone with --depth=1 (last commit only):
`git clone --depth=1 https://github.com/Gourieff/comfyui-reactor-node`
Then retrieve the rest (if you need it):
`git fetch --unshallow`
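Step I of the Troubleshooting section asks you to pick one of three Insightface wheels by Python version. The sketch below derives that filename from the interpreter it runs under, using `sys.version_info`. It assumes the naming pattern `insightface-0.7.3-cpXY-cpXY-win_amd64.whl` from the commands in step I and only covers Python 3.10, 3.11 and 3.12; the function name `insightface_wheel_name` is hypothetical and not part of ReActor.

```python
import sys

# Python versions for which step I lists a prebuilt Insightface 0.7.3 wheel.
SUPPORTED = {(3, 10), (3, 11), (3, 12)}


def insightface_wheel_name(version=None):
    """Return the wheel filename for a (major, minor) Python version.

    Hypothetical helper: it only reproduces the filename pattern used in
    the troubleshooting commands; it does not download or install anything.
    """
    major, minor = version if version is not None else sys.version_info[:2]
    if (major, minor) not in SUPPORTED:
        raise ValueError(f"No prebuilt wheel listed for Python {major}.{minor}")
    tag = f"cp{major}{minor}"  # e.g. "cp311" for Python 3.11
    return f"insightface-0.7.3-{tag}-{tag}-win_amd64.whl"


if __name__ == "__main__":
    # Run with the same interpreter you will install into,
    # e.g. python_embeded\python.exe for ComfyUI Portable.
    try:
        print(insightface_wheel_name())
    except ValueError as err:
        print(err)
```

Running it with `python_embeded\python.exe` (ComfyUI Portable) prints the filename to pass to `pip install`, or a message if your Python version has no wheel listed in step I.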

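The Face Indexes option described under Usage pairs the Source and Input index lists position by position (0,1,2 for Source with 1,0,2 for Input means Input face 1 gets Source face 0, and so on). The sketch below illustrates only that pairing rule; `parse_indexes` and `pair_face_indexes` are hypothetical names, not ReActor's code, and ignoring extra entries when the lists differ in length is an assumption of this sketch (it uses `zip`), not documented node behavior.

```python
def parse_indexes(text):
    """Parse a comma-separated index string such as "1,0,2" into ints."""
    return [int(part) for part in text.split(",") if part.strip()]


def pair_face_indexes(source, target):
    """Pair Source and Input face indexes position by position.

    Returns (source_index, input_index) tuples: the Input face at
    input_index is replaced by the Source face at source_index.
    Assumption: extra entries in the longer list are ignored (zip semantics).
    """
    return list(zip(parse_indexes(source), parse_indexes(target)))


if __name__ == "__main__":
    # The example from the Face Indexes section.
    for src, dst in pair_face_indexes("0,1,2", "1,0,2"):
        print(f"input face {dst} <- source face {src}")
```

For the example above this yields the pairs (0, 1), (1, 0) and (2, 2), matching the description that the second Input face is swapped with the first Source face.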