What is Stable Video Diffusion (SVD)?

Stable Video Diffusion (SVD) from Stability AI, is an extremely powerful image-to-video model, which accepts an image input, into which it “injects” motion, producing some fantastic scenes.

SVD is a latent diffusion model trained to generate short video clips from image inputs. There are two models. The first, img2vid, was trained to generate 14 frames of motion at a resolution of 576×1024, and the second, img2vid-xt is a finetune of the first, trained to generate 25 frames of motion at the same resolution.

The newly released (2/2024) SVD 1.1 is further finetuned on a set of parameters to produce excellent, high-quality outputs, but requires specific settings, detailed below.

Why should I be excited by SVD?

SVD creates beautifully consistent video movement from our static images!

How can I use SVD?

ComfyUI is leading the pack when it comes to SVD image generation, with official SVD support! 25 frames of 1024×576 video uses < 10 GB VRAM to generate.

It’s entirely possible to run the img2vid and img2vid-xt models on a GTX 1080 with 8GB of VRAM!

There’s still no word (as of 11/28) on official SVD support in Automatic1111.

If you’d like to try SVD on Google Colab, this workbook works on the Free Tier; https://github.com/sagiodev/stable-video-diffusion-img2vid/. Generation time varies, but is generally around 2 minutes on a V100 GPU.

You’ll need to download one of the SVD models, from the links below, placing them in the ComfyUI/models/checkpoints directory

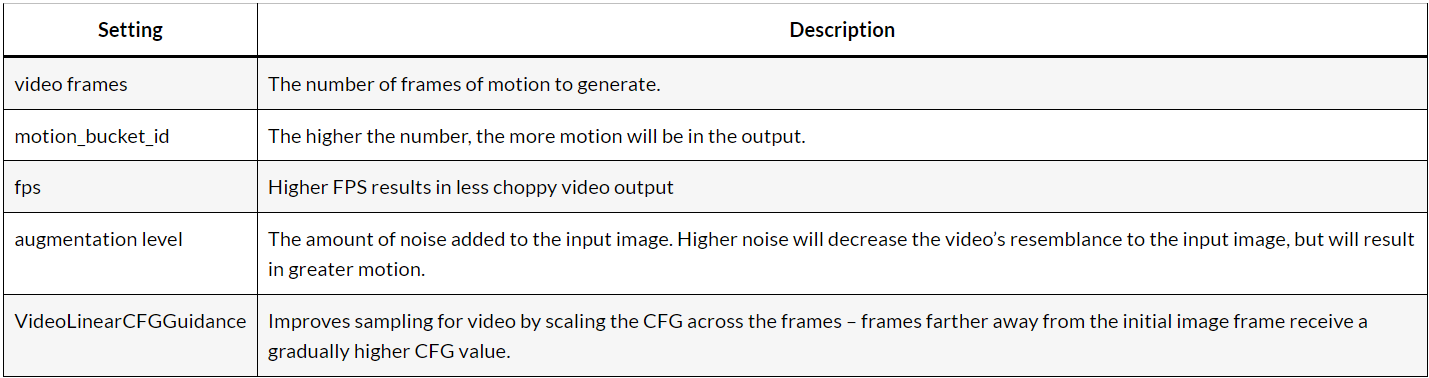

After updating your ComfyUI installation, you’ll see new nodes for VideoLinearCFGGuidance and SVD_img2vid _Conditioning. The Conditioning node takes the following inputs;

You can download ComfyUI workflows for img2video and txt2video below, but keep in mind you’ll need to have an updated ComfyUI, and also may be missing additional nodes for Video. I recommend using the ComfyUI Manager to identify and download missing nodes!

Suggested Settings

The settings below are suggested settings for each SVD component (node), which I’ve found produce the most consistently useable outputs, with the img2vid and img2vid-xt models.

Settings – Img2vid-xt-1.1

February 2024 saw the release of a finetuned SVD model, version 1.1. This version only works with a very specific set of parameters to improve the consistency of outputs. If using the Img2vid-xt-1.1 model, the following settings must be applied to produce the best results;

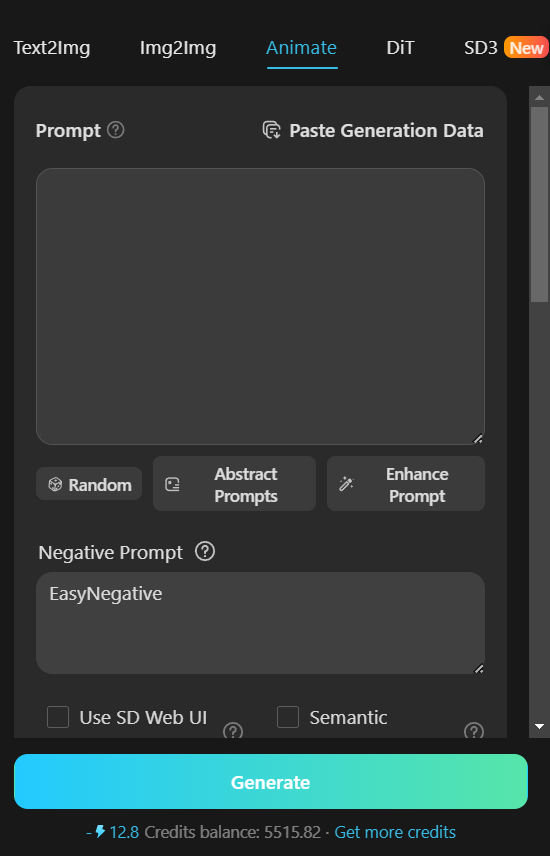

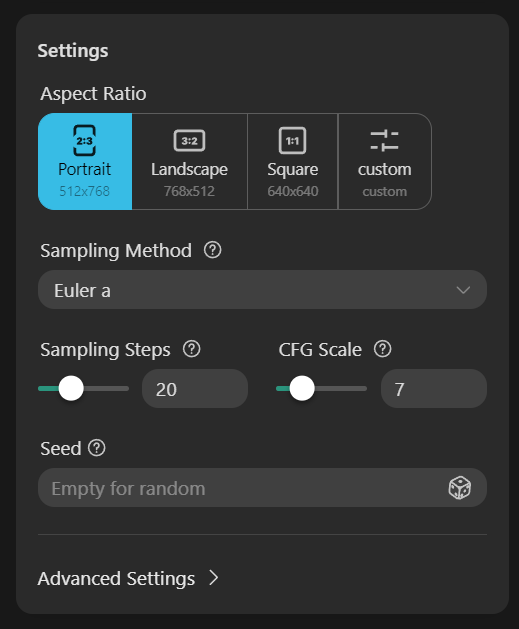

The easiest way to generate videos

in tensor.art, you can generate videos very easily compared to the explanation above, all you need to do is input the prompt you want, select the model you like, set the ratio and set the frame in the animatediff menu.

Output Examples

Limitations

It’s not perfect! Currently there are a few issues with the implementation, including;

Generations are short! Only <=4 second generations are possible, at present.

Sometimes there’s no motion in the outputs. We can tweak the conditioning parameters, but sometimes the images just refuse to move.

The models cannot be controlled through text.

Faces, and bodies in general, often aren’t the best!