Stable Diffusion Intro.

Stable Diffusion is an open-source text-to-image AI model that can generate amazing images from a text prompt in seconds. The model was trained on images from the LAION-5B dataset (LAION stands for Large-scale Artificial Intelligence Open Network). It was developed by CompVis, Stability AI and RunwayML. All research artifacts from Stability AI are intended to be open-sourced.

Prompt Engineering.

Prompt engineering is the process of structuring words so that they can be interpreted and understood by a text-to-image model. It is the language you need to speak in order to tell an AI model what to draw.

A well-written prompt consists of keywords and a good sentence structure.

Once you have something in mind, ask yourself a list of questions:

Do you want a photo, a painting, or digital art?

What’s the subject: a person, an animal, or the painting itself?

What details are part of your idea?

Special lighting: soft, ambient, etc.

Environment: indoor, outdoor, etc.

Color scheme: vibrant, muted, etc.

Shot: front, from behind, etc.

Background: solid color, forest, etc.

What style: illustration, 3D render, movie poster?

The order of words is important.

The order and presentation of the desired output is almost as important as the vocabulary itself. It is recommended to list your concepts explicitly and separately rather than trying to cram them into one simple sentence.
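As a minimal sketch, the questions and the ordering advice above can be turned into a small prompt builder. The `PromptSpec` class and its field names are hypothetical, and putting the medium first simply follows the order of the questions; it is one reasonable convention, not a rule any model enforces.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Answers to the planning questions (hypothetical field names)."""
    medium: str = ""                                   # photo, painting, digital art...
    subject: str = ""                                  # a person, an animal...
    details: list[str] = field(default_factory=list)   # lighting, environment, colors, shot, background
    style: str = ""                                    # illustration, 3D render, movie poster...

    def to_prompt(self) -> str:
        # List each concept explicitly and separately, in a fixed order,
        # instead of cramming everything into one sentence. Empty answers are skipped.
        parts = [self.medium, self.subject, *self.details, self.style]
        return ", ".join(p.strip() for p in parts if p.strip())


spec = PromptSpec(
    medium="digital art",
    subject="a red fox",
    details=["soft lighting", "outdoor", "muted colors", "front shot", "forest background"],
    style="illustration",
)
print(spec.to_prompt())
# digital art, a red fox, soft lighting, outdoor, muted colors, front shot, forest background, illustration
```

Keeping each concept as its own comma-separated item makes it easy to reorder or swap a single detail without rewriting a whole sentence.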

Keywords and Sub-Keywords.

Keywords are words that can change the style, format, or perspective of the image. Certain magic words or phrases are widely reported to boost image quality. Sub-keywords are words that belong to the semantic group of a keyword; this hierarchy matters for prompting as well as for designing LoRAs or models.
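One way to keep that hierarchy visible in a prompt is to emit each keyword directly followed by its sub-keywords. The sketch below assumes a hypothetical dictionary of keywords; the example terms are illustrative only and are not claimed to be quality boosters.

```python
# Hypothetical keyword hierarchy: each keyword maps to its sub-keywords.
KEYWORDS: dict[str, list[str]] = {
    "portrait": ["close-up", "headshot"],
    "lighting": ["soft lighting", "rim light"],
}


def flatten_keywords(hierarchy: dict[str, list[str]]) -> list[str]:
    """Emit each keyword followed directly by its sub-keywords, skipping repeats."""
    seen: set[str] = set()
    ordered: list[str] = []
    for keyword, subs in hierarchy.items():
        for term in (keyword, *subs):
            if term not in seen:      # a sub-keyword shared by two groups appears once
                seen.add(term)
                ordered.append(term)
    return ordered


print(", ".join(flatten_keywords(KEYWORDS)))
# portrait, close-up, headshot, lighting, soft lighting, rim light
```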

Classifier-Free Guidance (CFG, default 7)

You can think of this parameter as “AI creativity vs. your prompt”. Lower values give the AI more freedom to be creative, while higher values force it to stick to the prompt.

CFG {2-6}: for exploring, testing or research, when you want heavy AI influence.

CFG {7-10}: if you have a solid prompt but still want some creativity.

CFG {11-15}: if your prompt is solid enough and you don't want the AI to stray from your idea.

CFG {16-20}: not recommended; images tend to become incoherent.
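Under the hood, classifier-free guidance runs the model twice per step, once with your prompt and once with an empty prompt, and extrapolates from the unconditioned prediction toward the conditioned one: guided = uncond + CFG * (cond - uncond). The sketch below applies that formula to toy numbers (plain lists standing in for the model's noise predictions) to show why high values exaggerate the prompt's influence.

```python
def apply_cfg(noise_uncond: list[float], noise_cond: list[float], scale: float) -> list[float]:
    """Classifier-free guidance on toy noise predictions.

    guided = uncond + scale * (cond - uncond)
    scale = 1 reproduces the prompt-conditioned prediction; larger scales
    push further in the direction the prompt points.
    """
    if len(noise_uncond) != len(noise_cond):
        raise ValueError("predictions must have the same shape")
    return [u + scale * (c - u) for u, c in zip(noise_uncond, noise_cond)]


uncond = [0.10, -0.20, 0.05]   # toy prediction with an empty prompt
cond = [0.30, -0.10, 0.00]     # toy prediction with your prompt
for scale in (1, 7, 15):
    print(scale, [round(x, 2) for x in apply_cfg(uncond, cond, scale)])
```

The gap between the two predictions is multiplied by the CFG value, so very high values can overshoot into the incoherent results mentioned above.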

Step Count

Stable Diffusion creates an image by starting with a canvas full of noise and gradually denoising it to reach the final output; this parameter controls the number of these denoising steps. Higher is usually better, but only up to a point, so beginners are advised to stick with the default.
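To make the step count concrete, here is a sketch of how a scheduler might pick the noise levels the model walks through. It assumes 1000 training noise levels (the value Stable Diffusion's schedulers are commonly configured with) and a simple evenly spaced schedule; real schedulers offer several spacing strategies and may offset these values. The function name is hypothetical.

```python
def denoising_timesteps(num_steps: int, train_timesteps: int = 1000) -> list[int]:
    """Evenly spaced noise levels, from noisiest to cleanest.

    Each entry is one denoising step; more steps means smaller jumps
    between consecutive noise levels.
    """
    if not 1 <= num_steps <= train_timesteps:
        raise ValueError("num_steps must be between 1 and train_timesteps")
    stride = train_timesteps // num_steps
    return [i * stride for i in reversed(range(num_steps))]


steps = denoising_timesteps(20)
print(len(steps), steps[:3], steps[-1])  # 20 [950, 900, 850] 0
```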

Seed

Seed is a number that controls the initial noise. The seed is the reason you get a different image each time you generate, even when all other parameters are fixed. By default, most implementations of Stable Diffusion change the seed automatically every time you generate an image. You can get the same result back if you keep the prompt, the seed and all other parameters the same.

⚠️ Seeding is important for your creations, so try to save a good seed and slightly tweak the prompt to get what you’re looking for while keeping the same composition.
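The link between seed and initial noise can be illustrated with Python's own random number generator. This is a toy stand-in: Stable Diffusion draws a much larger starting latent (for example 4 x 64 x 64 values for a 512 x 512 image in version 1.x) with its own RNG, but the principle is the same. The function name is hypothetical.

```python
import random


def initial_noise(seed: int, size: int = 8) -> list[float]:
    """Draw toy starting noise from a generator initialised with `seed`."""
    rng = random.Random(seed)  # private generator: does not touch global random state
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


same_a = initial_noise(1234)
same_b = initial_noise(1234)
other = initial_noise(1235)
print(same_a == same_b, same_a == other)  # True False
```

Same seed, same noise, same composition; this is why saving a good seed and only tweaking the prompt keeps the layout stable.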

Sampler

Diffusion samplers are the methods used to denoise the image during generation; they take different amounts of time and different numbers of steps to reach a usable image. The sampler therefore affects the step count significantly: a more refined sampler may need fewer or more steps, giving more or less subjective detail.
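Samplers can be viewed as numerical solvers that step from noise to image. The sketch below compares two classic solvers whose names also appear in common sampler lists, Euler and Heun, on a toy equation dx/dt = -x instead of a real diffusion model. Heun spends two evaluations per step but lands much closer to the exact answer for the same step count, which illustrates why samplers differ in how many steps they need.

```python
import math


def f(t: float, x: float) -> float:
    """Toy ODE dx/dt = -x, standing in for the model's denoising direction."""
    return -x


def euler(x: float, t0: float, t1: float, steps: int) -> float:
    h = (t1 - t0) / steps
    t = t0
    for _ in range(steps):
        x = x + h * f(t, x)            # one evaluation per step
        t += h
    return x


def heun(x: float, t0: float, t1: float, steps: int) -> float:
    h = (t1 - t0) / steps
    t = t0
    for _ in range(steps):
        k1 = f(t, x)
        k2 = f(t + h, x + h * k1)      # second evaluation corrects the first guess
        x = x + h * (k1 + k2) / 2
        t += h
    return x


exact = math.exp(-1.0)  # true value of x(1) when x(0) = 1
for n in (5, 10, 20):
    err_euler = abs(euler(1.0, 0.0, 1.0, n) - exact)
    err_heun = abs(heun(1.0, 0.0, 1.0, n) - exact)
    print(f"{n:>2} steps  euler error {err_euler:.5f}  heun error {err_heun:.5f}")
```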

CLIP Skip

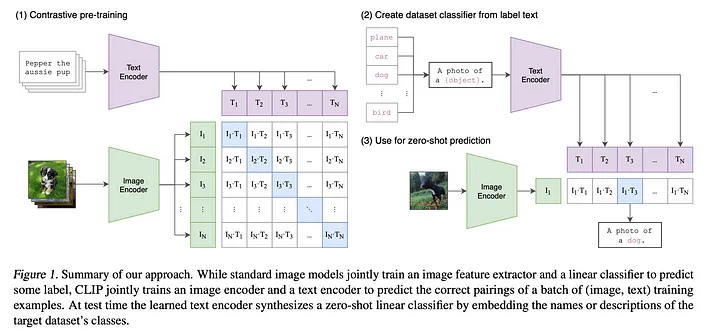

First, we need to know what CLIP is. CLIP (Contrastive Language-Image Pre-training) is a multimodal model trained on 400 million (image, text) pairs. During training, a text encoder and an image encoder are jointly trained to predict which caption goes with which image, as shown in the diagram above.
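The "which caption goes with which image" prediction can be sketched as a similarity lookup. The embeddings below are made-up 3-number vectors standing in for the outputs of CLIP's encoders; during training, CLIP adjusts the encoders so that matching pairs score highest, while this sketch only shows the matching step. Function names are hypothetical.

```python
import math


def normalize(v: list[float]) -> list[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def similarity_matrix(images: list[list[float]], texts: list[list[float]]) -> list[list[float]]:
    """Cosine similarity between every image embedding and every text embedding."""
    imgs = [normalize(v) for v in images]
    txts = [normalize(v) for v in texts]
    return [[sum(a * b for a, b in zip(i, t)) for t in txts] for i in imgs]


def best_caption(images: list[list[float]], texts: list[list[float]]) -> list[int]:
    """For each image, the index of the caption it is most similar to."""
    return [max(range(len(row)), key=row.__getitem__) for row in similarity_matrix(images, texts)]


# Toy embeddings: image 0 is "a dog" photo, image 1 is "a cat" photo.
image_embs = [[0.9, 0.1, 0.0], [0.0, 0.8, 0.3]]
text_embs = [[0.1, 0.9, 0.2], [1.0, 0.0, 0.1]]   # captions: "a cat", "a dog"
print(best_caption(image_embs, text_embs))  # [1, 0]
```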

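Think of Clip Skip as choosing how far your prompt travels through CLIP's text encoder before it is handed to the image model. The encoder processes the prompt through a stack of layers: Clip Skip 1 uses the output of the final layer, Clip Skip 2 the second-to-last layer, and so on. Skipping more layers generally makes the image follow the prompt less precisely, while lower numbers keep more of the prompt's detail, so you get more accurate results.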

Clip Skip {1}: strong matches with the prompt and little liberty.

Clip Skip {2}: nicer matches and slightly more liberty.

Clip Skip {3-5}: looser matches and high liberty.

Clip Skip {6}: unexpected results.
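Mechanically, Clip Skip just selects which layer's output of the text encoder is used. A minimal sketch, assuming a list of per-layer outputs ordered from first to last; the toy 12-layer encoder mirrors the depth of the CLIP text encoder used by Stable Diffusion 1.x. Some implementations additionally apply the encoder's final layer normalization to the selected output, which is omitted here. The function name is hypothetical.

```python
def clip_skip_output(hidden_states: list[list[float]], clip_skip: int) -> list[float]:
    """Pick which text-encoder layer output conditions the image model.

    `hidden_states` holds one output per layer, first to last.
    Clip Skip 1 -> last layer, 2 -> second-to-last, and so on.
    """
    if not 1 <= clip_skip <= len(hidden_states):
        raise ValueError("clip_skip must be between 1 and the number of layers")
    return hidden_states[-clip_skip]


# Toy 12-layer encoder: each layer's "output" is just tagged with its layer number.
layers = [[float(n)] for n in range(1, 13)]
print(clip_skip_output(layers, 1), clip_skip_output(layers, 2))  # [12.0] [11.0]
```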

ENSD (Eta Noise Seed Delta)

It's like a slider for the seed parameter: it gives you different image results for a fixed seed number. So what is the optimal number? There isn't one. Just use your lucky number; you're simply pointing the seeding at that number. If you use a random seed every time, ENSD is irrelevant.

So why do people commonly use 31337? In leetspeak, a system of modified spellings used primarily on the Internet, 31337 reads as “eleet” (elite). It's a lucky-charm number with no special effect, so it's safe to use any other number.
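A sketch of the idea, assuming ENSD acts as a plain offset added to the seed of the extra noise a sampler draws while generating (in the AUTOMATIC1111 web UI, where this setting is commonly found, it is described as affecting ancestral samplers; treat that detail as an assumption here). The function name is hypothetical, and the starting noise is assumed to still come from the unmodified seed.

```python
import random


def sampler_noise(seed: int, ensd: int = 0, size: int = 4) -> list[float]:
    """Toy extra noise drawn during sampling, seeded with seed + ENSD (assumed model)."""
    rng = random.Random(seed + ensd)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


seed = 1234
print(sampler_noise(seed, 0) == sampler_noise(seed, 31337))      # False: same seed, different result
print(sampler_noise(seed, 31337) == sampler_noise(seed, 31337))  # True: reproducible
print(sampler_noise(seed, 1) == sampler_noise(seed + 1, 0))      # True: the delta just "points" the seed
```

Under this model any delta is as good as any other; it only matters when you want to reproduce an image that was made with a specific value.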